Research:

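- Notice: GLSL mod() is different from HLSL fmod(). GLSL mod(x, y) is defined as x - y * floor(x / y), so the result takes the sign of y, while HLSL fmod(x, y) truncates toward zero, so the result takes the sign of x. The two disagree for operands of opposite sign, e.g. mod(-1.5, 1.0) = 0.5 but fmod(-1.5, 1.0) = -0.5.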
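A minimal C++ sketch that reproduces both behaviours on the CPU, useful when checking shader ports. The helper names `glslMod` and `hlslFmod` are hypothetical; `std::fmod` follows the same truncate-toward-zero convention as HLSL `fmod`.

```cpp
#include <cmath>

// GLSL-style mod: x - y * floor(x / y). The result has the sign of y,
// so for y > 0 it always lies in [0, y).
float glslMod(float x, float y) {
    return x - y * std::floor(x / y);
}

// HLSL-style fmod: remainder with the sign of x (quotient truncated toward
// zero). std::fmod from <cmath> uses the same convention.
float hlslFmod(float x, float y) {
    return std::fmod(x, y);
}
```

When porting a shader, the HLSL behaviour can be written in GLSL as `x - y * trunc(x / y)`, and the GLSL behaviour in HLSL as `x - y * floor(x / y)`.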

Research:

Monday 07/24

Finish user study survey.

Tuesday 07/25

Revised user study.

Try interpolation

Wednesday 07/26

Finish interpolation, try to debug the strange lines (failed)

Thursday 07/27

Solve bugs 306 & 400 for Vive by:

Go to GLAO and ask about the parking ticket.

Try TAA with variance sampling to fix the flickering.
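A minimal sketch of a variance-based history clamp for TAA, assuming "variance sampling" refers to this technique: the mean and standard deviation of the current frame's 3x3 neighbourhood define a range, and the reprojected history value is clamped to mean ± gamma * sigma before blending. This rejects stale history (ghosting) while the accumulation smooths frame-to-frame flicker. It handles a single channel only; the name `resolveTAA` and the parameters `gamma` and `alpha` are hypothetical, not this project's code.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

// neighborhood: 3x3 samples from the current frame around the pixel,
// index 4 being the centre pixel. history: reprojected previous result.
// gamma (hypothetical) scales the allowed range; alpha (hypothetical) is the
// weight of the current frame in the exponential moving average.
float resolveTAA(const std::array<float, 9>& neighborhood, float history,
                 float gamma = 1.0f, float alpha = 0.1f) {
    float m1 = 0.0f, m2 = 0.0f;  // first and second moments
    for (float c : neighborhood) {
        m1 += c;
        m2 += c * c;
    }
    m1 /= 9.0f;
    m2 /= 9.0f;
    float sigma = std::sqrt(std::max(0.0f, m2 - m1 * m1));
    float lo = m1 - gamma * sigma;
    float hi = m1 + gamma * sigma;
    // Clamp the history into the local colour range of the current frame.
    float clampedHistory = std::min(std::max(history, lo), hi);
    float current = neighborhood[4];
    // Blend: mostly (clamped) history, a little of the new frame.
    return alpha * current + (1.0f - alpha) * clampedHistory;
}
```

A stricter variant clips the history toward the neighbourhood mean instead of clamping each channel independently.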

Description:

https://leetcode.com/problems/shortest-unsorted-continuous-subarray/#/description

Algorithm:

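Only the start index and the end index matter. Scanning right to left while tracking the running minimum, the leftmost element that is larger than the minimum to its right is the start. Scanning left to right while tracking the running maximum, the rightmost element that is smaller than the maximum to its left is the end.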

Code:

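```cpp
#include <vector>
using namespace std;

class Solution {
public:
    int findUnsortedSubarray(vector<int>& nums) {
        if (nums.empty() || nums.size() == 1) {
            return 0;
        }
        int n = nums.size();
        // Right to left: track the running minimum. Any element larger than
        // it is out of place; the leftmost such index is the window start.
        int cmin = nums[n - 1], indexmin = n - 1;
        for (int i = n - 1; i >= 0; --i) {
            if (nums[i] > cmin) {
                indexmin = i;
            } else {
                cmin = nums[i];
            }
        }
        // Left to right: track the running maximum. The rightmost element
        // smaller than it is the window end.
        int cmax = nums[0], indexmax = 0;
        for (int i = 0; i < n; ++i) {
            if (nums[i] < cmax) {
                indexmax = i;
            } else {
                cmax = nums[i];
            }
        }
        // For a sorted array indexmax (0) never exceeds indexmin (n - 1).
        return indexmax > indexmin ? indexmax - indexmin + 1 : 0;
    }
};
```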

Time & Space:

O(n) & O(1)
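The start/end idea can be cross-checked against a simpler sort-and-compare version. This is a different technique (O(n log n) time, O(n) space), not the two-scan O(n) solution above, and the function name `findUnsortedSubarraySorted` is hypothetical. It is handy for testing the two-scan solution on random inputs.

```cpp
#include <algorithm>
#include <vector>

// Reference version: the unsorted window runs from the first to the last
// position where nums differs from its sorted copy.
int findUnsortedSubarraySorted(const std::vector<int>& nums) {
    std::vector<int> sorted(nums);
    std::sort(sorted.begin(), sorted.end());
    int n = static_cast<int>(nums.size());
    int start = 0;
    while (start < n && nums[start] == sorted[start]) ++start;
    if (start == n) return 0;  // already sorted
    int end = n - 1;
    while (end > start && nums[end] == sorted[end]) --end;
    return end - start + 1;
}
```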


Description:

https://leetcode.com/problems/game-of-life/#/description

Algorithm:

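Use a state machine to update the board in place. Each cell is encoded as 0 (dead -> dead), 1 (live -> live), 2 (live -> dead) or 3 (dead -> live). While counting, a neighbour is treated as originally alive if it is 1 or 2; a final pass takes the value % 2 to obtain the next generation.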

Code:

```cpp
#include <vector>
using namespace std;

class Solution {
public:
    void gameOfLife(vector<vector<int>>& board) {
        int m = board.size();
        if (m == 0) return;
        int n = board[0].size();
        if (n == 0) return;
        // First pass: encode current and next state in one value.
        // 0: dead -> dead, 1: live -> live, 2: live -> dead, 3: dead -> live
        for (int i = 0; i < m; i++) {
            for (int j = 0; j < n; j++) {
                if (changeState(board, i, j, m, n)) {
                    if (board[i][j] % 2 == 1) board[i][j] = 1;
                    else board[i][j] = 3;
                } else {
                    if (board[i][j] % 2 == 1) board[i][j] = 2;
                    else board[i][j] = 0;
                }
            }
        }
        // Second pass: odd codes (1, 3) are alive in the next generation.
        for (int i = 0; i < m; i++) {
            for (int j = 0; j < n; j++) {
                board[i][j] = board[i][j] % 2;
            }
        }
    }

private:
    // Returns true if cell (i, j) is alive in the next generation. The board is
    // taken by const reference so it is not copied on every call.
    bool changeState(const vector<vector<int>>& board, int i, int j, int m, int n) {
        int count = 0;
        // Neighbours that were originally alive are encoded as 1 or 2.
        if (i - 1 >= 0 && j - 1 >= 0 && (board[i - 1][j - 1] == 1 || board[i - 1][j - 1] == 2)) count++;
        if (i - 1 >= 0 && (board[i - 1][j] == 1 || board[i - 1][j] == 2)) count++;
        if (i - 1 >= 0 && j + 1 < n && (board[i - 1][j + 1] == 1 || board[i - 1][j + 1] == 2)) count++;
        if (j + 1 < n && (board[i][j + 1] == 1 || board[i][j + 1] == 2)) count++;
        if (j - 1 >= 0 && (board[i][j - 1] == 1 || board[i][j - 1] == 2)) count++;
        if (i + 1 < m && j - 1 >= 0 && (board[i + 1][j - 1] == 1 || board[i + 1][j - 1] == 2)) count++;
        if (i + 1 < m && (board[i + 1][j] == 1 || board[i + 1][j] == 2)) count++;
        if (i + 1 < m && j + 1 < n && (board[i + 1][j + 1] == 1 || board[i + 1][j + 1] == 2)) count++;
        // The current cell has not been rewritten yet, so it is still 0 or 1.
        if (board[i][j] == 1 && (count == 3 || count == 2)) return true;
        if (board[i][j] == 0 && count == 3) return true;
        return false;
    }
};
```

Time & Space:

O(mn) & O(1), where m x n is the board size
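The "state machine" can also be made explicit with a common alternative encoding (not the 0-3 numbering used above): bit 0 stores the current state and bit 1 the next state, so 00 is dead -> dead, 01 live -> dead, 10 dead -> live and 11 live -> live. A sketch, with the hypothetical name `gameOfLifeBits`:

```cpp
#include <vector>

// Neighbours are read with (cell & 1), so cells already updated in this pass
// still report their original state.
void gameOfLifeBits(std::vector<std::vector<int>>& board) {
    int m = static_cast<int>(board.size());
    if (m == 0) return;
    int n = static_cast<int>(board[0].size());
    for (int i = 0; i < m; ++i) {
        for (int j = 0; j < n; ++j) {
            int live = 0;
            for (int di = -1; di <= 1; ++di) {
                for (int dj = -1; dj <= 1; ++dj) {
                    if (di == 0 && dj == 0) continue;  // skip the cell itself
                    int r = i + di, c = j + dj;
                    if (r >= 0 && r < m && c >= 0 && c < n) live += board[r][c] & 1;
                }
            }
            bool alive = (board[i][j] & 1) != 0;
            // Set bit 1 if the cell lives in the next generation.
            if (live == 3 || (alive && live == 2)) board[i][j] |= 2;
        }
    }
    for (auto& row : board)
        for (int& cell : row) cell >>= 1;  // move the next state into bit 0
}
```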

No user test

Towards Foveated Rendering for Gaze-Tracked Virtual Reality

Pre-user study: tests the hypothesis that temporally stable, contrast-preserving foveation can raise the foveation threshold. How the shading rates were chosen is not described clearly in the paper; the supplemental material is needed for the details.

Post-user study: verifies that the authors' rendering system indeed achieves the superior image quality predicted by their perceptual target. Instead of estimating a threshold rate of foveation, it estimates the threshold size of the intermediate region between the inner (foveal) and the outer (peripheral) regions.

Adaptive Image‐Space Sampling for Gaze‐Contingent Real‐time Rendering

Foveated Real‐Time Ray Tracing for Head‐Mounted Displays

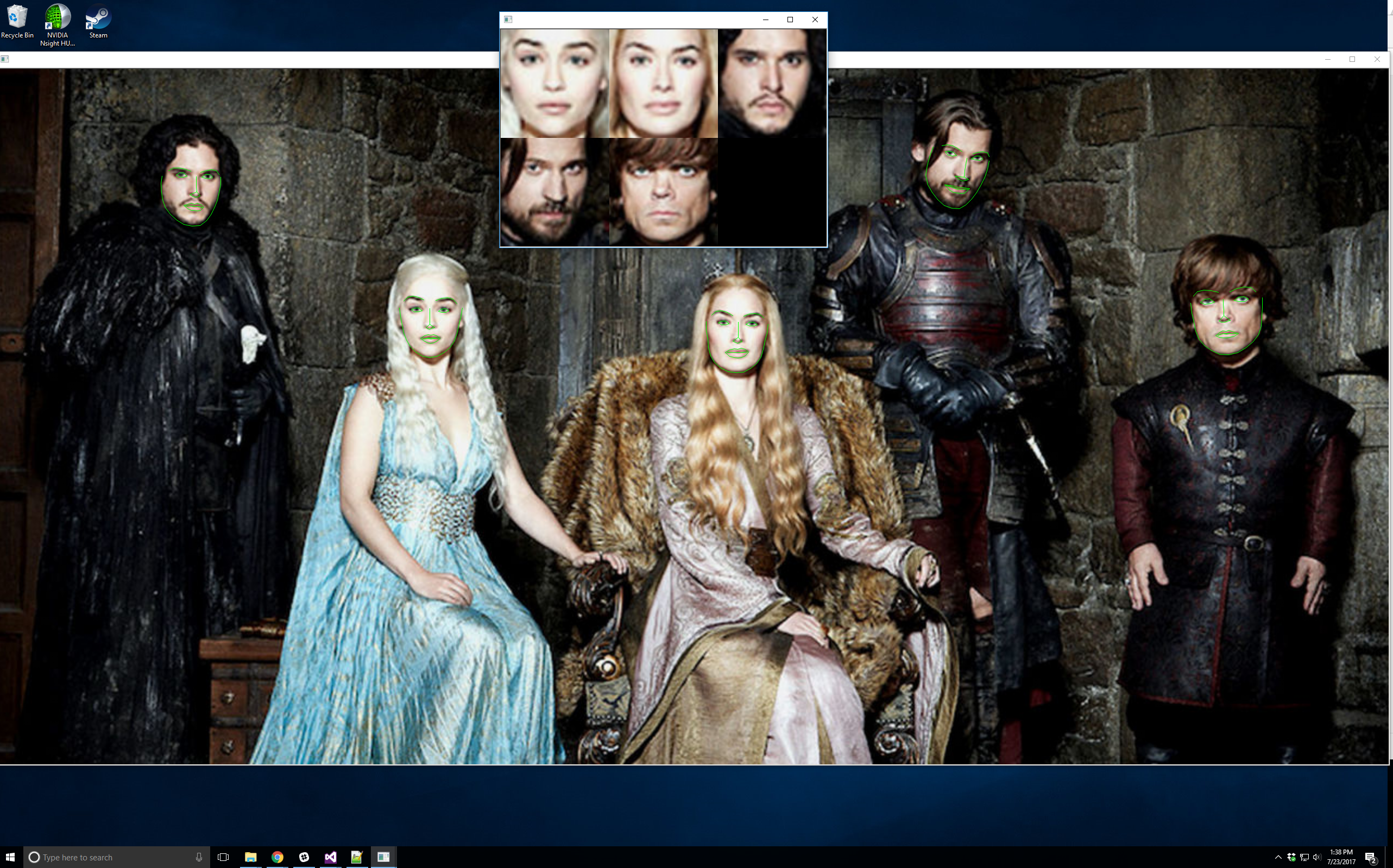

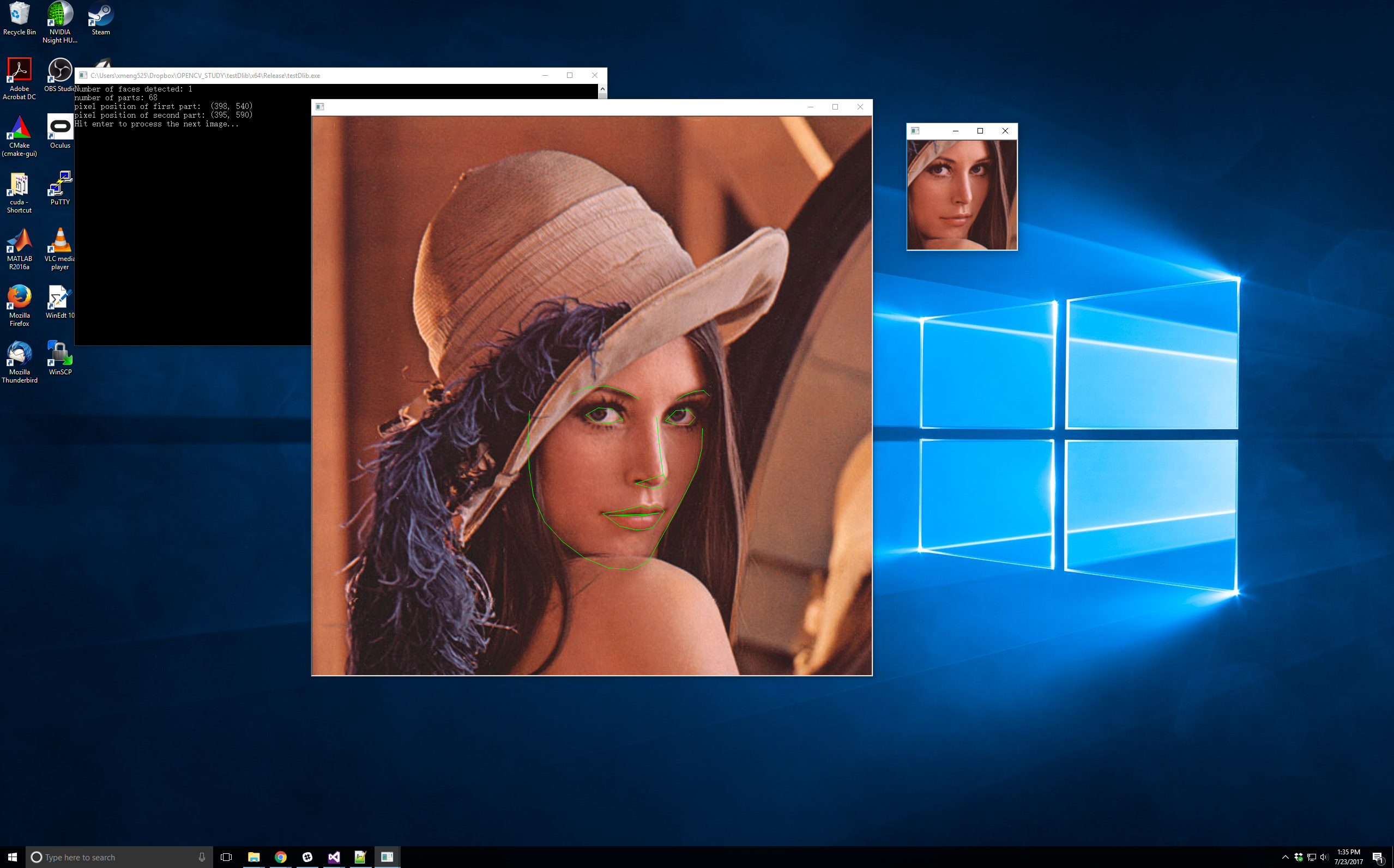

Use the code in http://xiaoxumeng.com/install-dlib-on-visual-studio-2015/ to get landmarks.

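The face bounding box is stored in shape.rect as seen in the debugger (l: left, t: top, r: right, b: bottom); in code it can be read with shape.get_rect().left(), .top(), .right() and .bottom().

The face landmarks are stored in shape.parts; in code each one can be read with shape.part(i) for i from 0 to shape.num_parts() - 1 (68 points with the shape_predictor_68_face_landmarks model).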

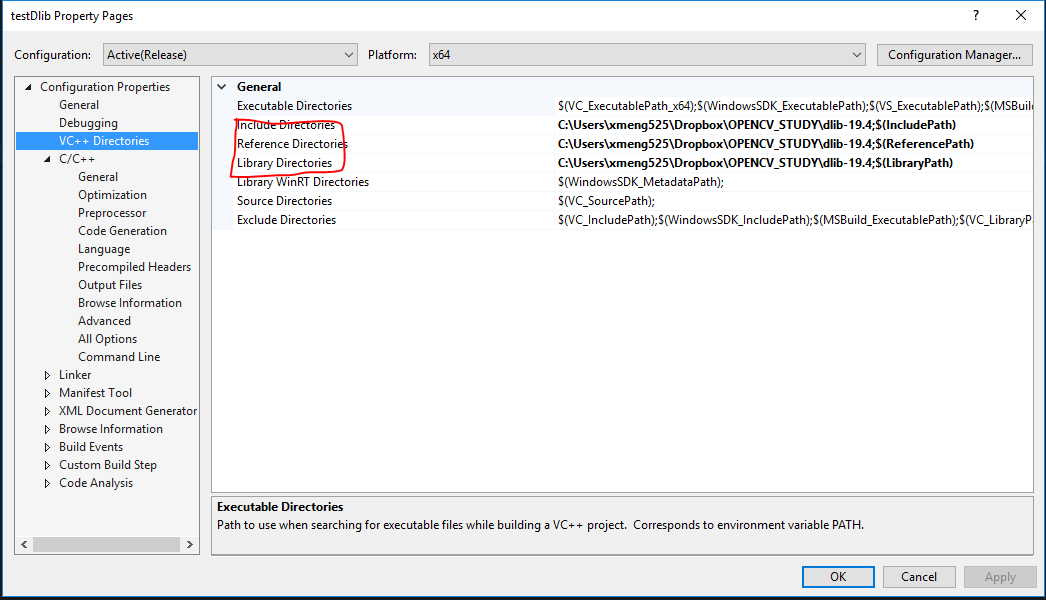

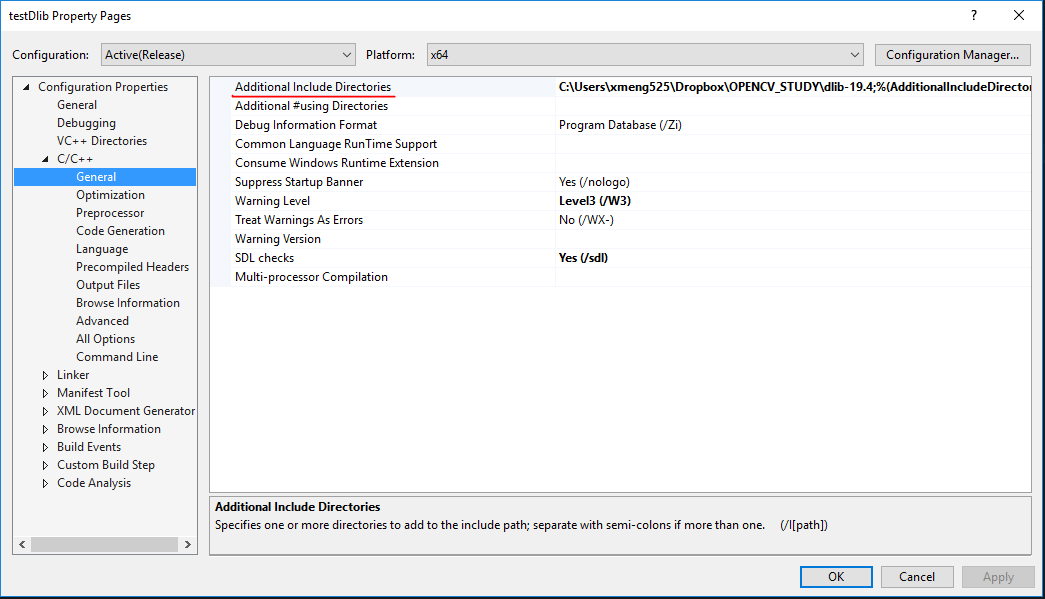

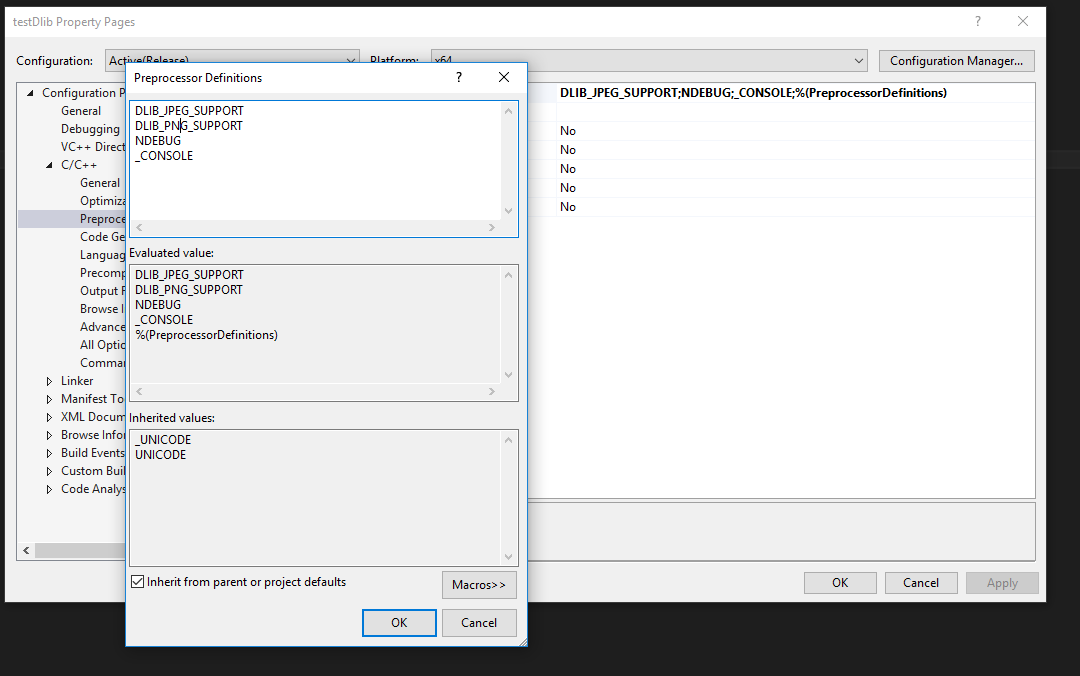

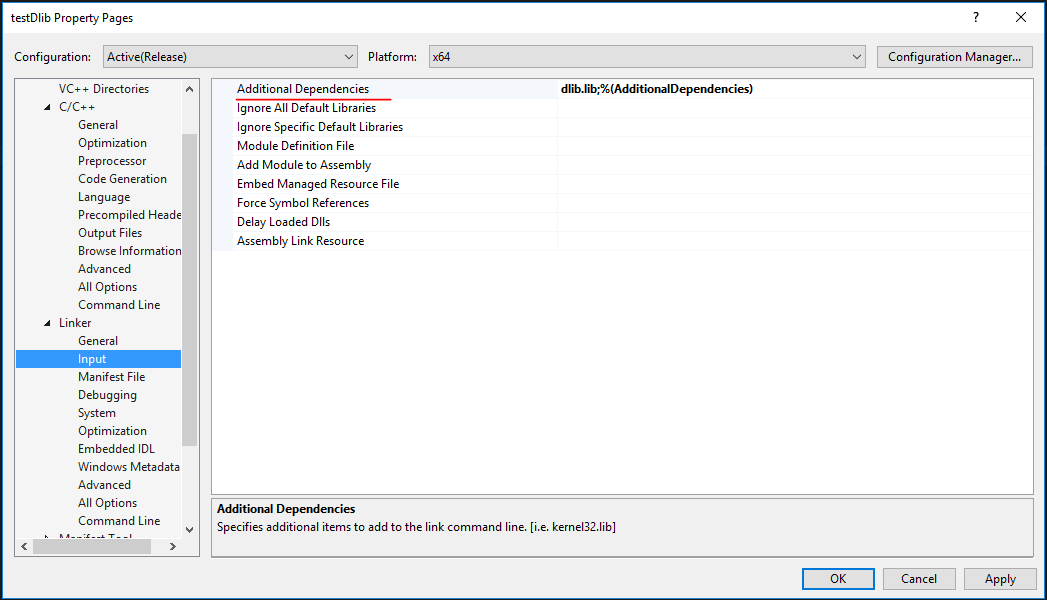

Step1: Download Dlib

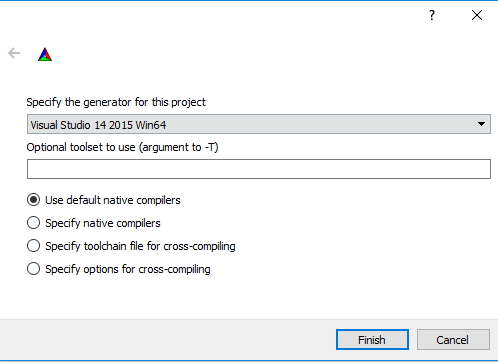

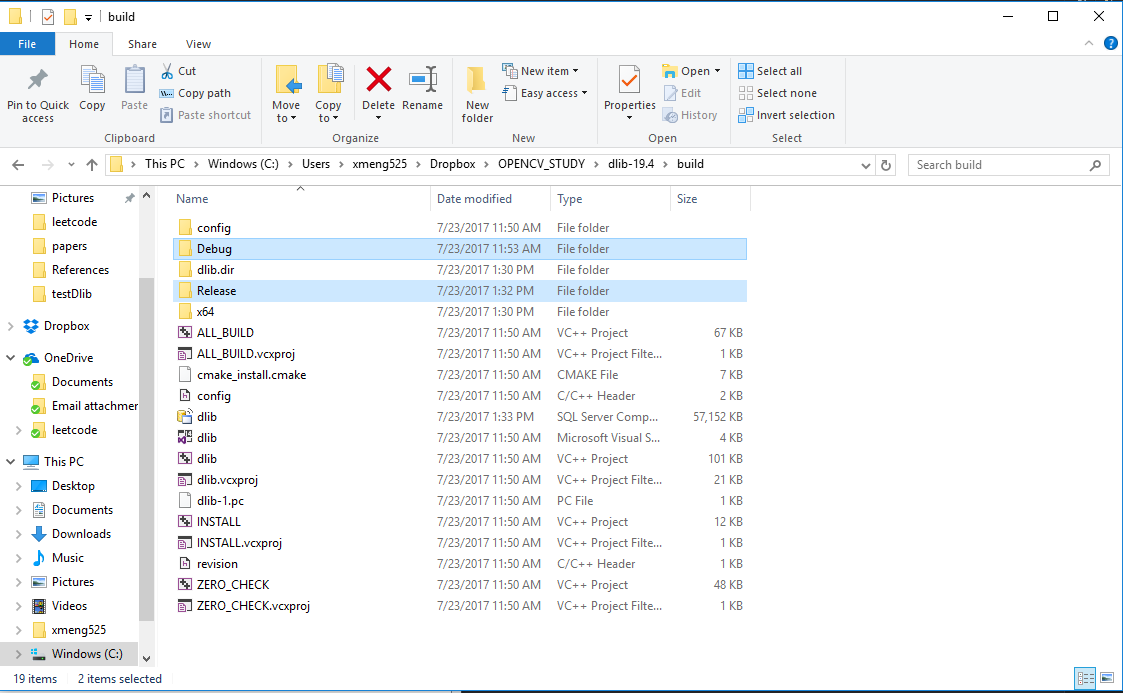

Step2: Use CMake to generate the library

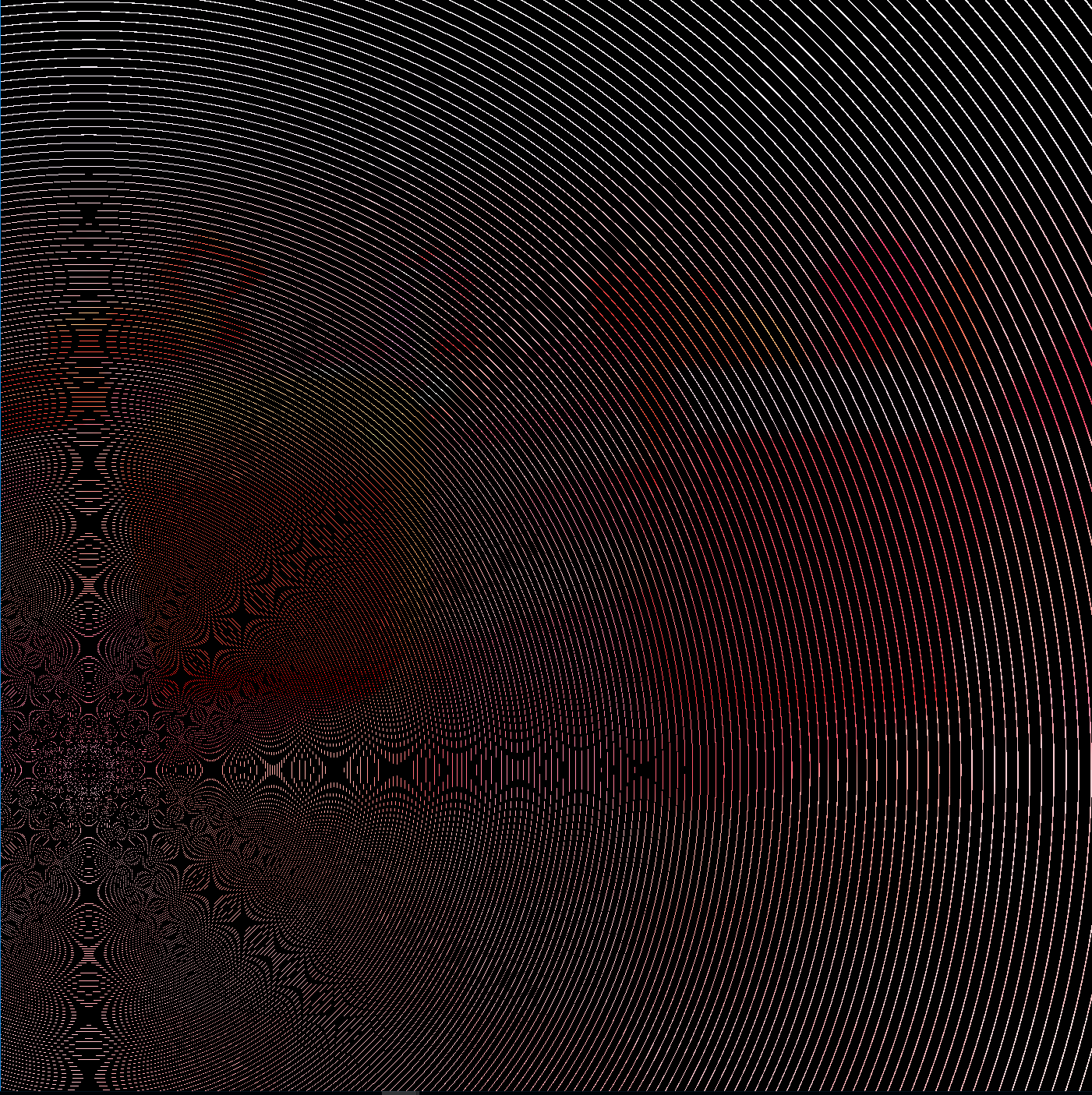

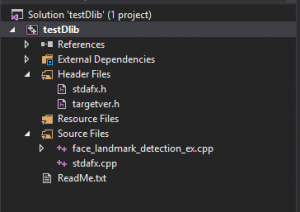

Figure 1

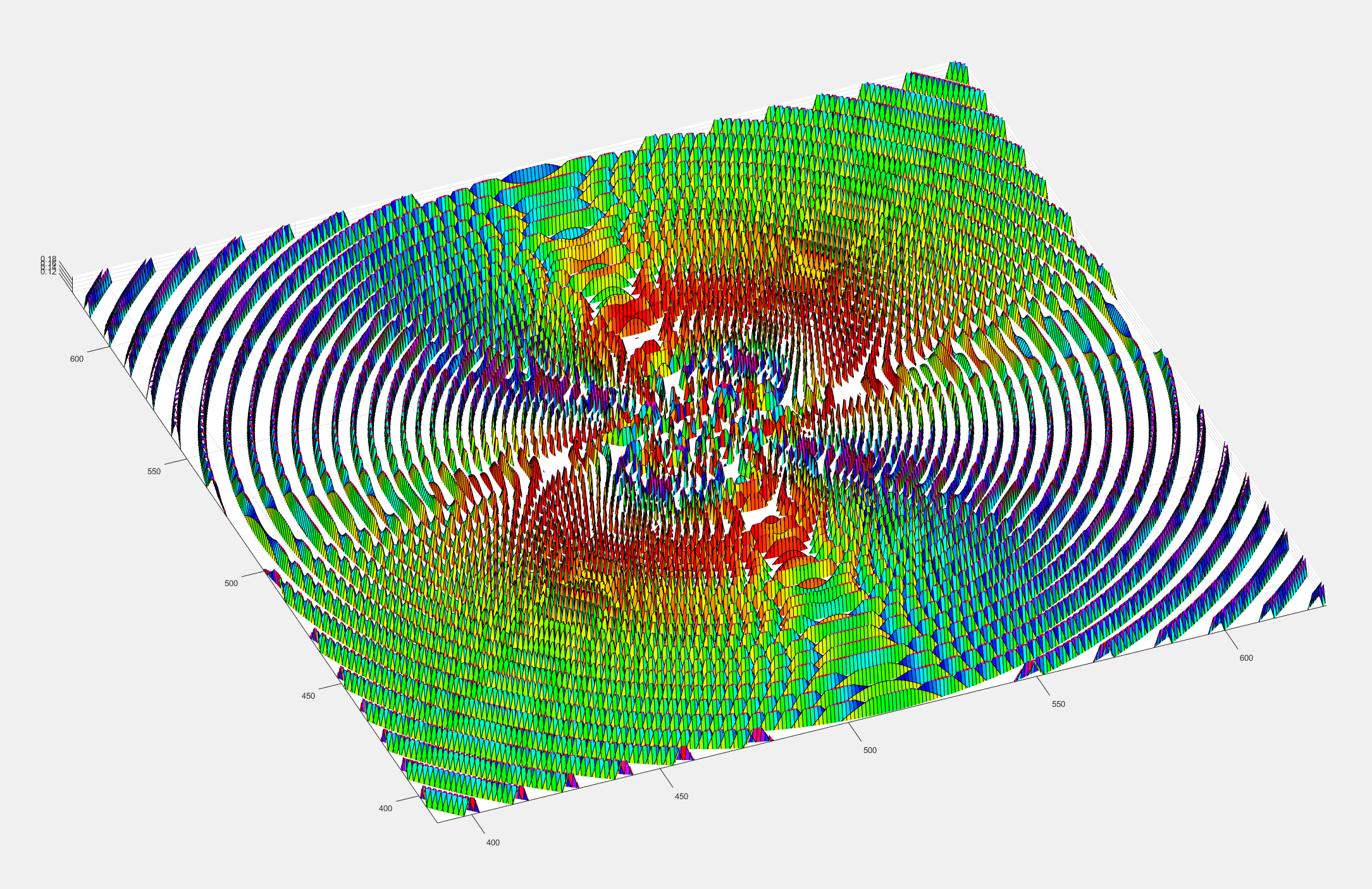

Figure 2

Figure 3

Step3: Create a new Win32 Console Application for testing.

Step4: Testing.

```cpp
#include "stdafx.h"
#include <dlib/image_processing/frontal_face_detector.h>
#include <dlib/image_processing/render_face_detections.h>
#include <dlib/image_processing.h>
#include <dlib/gui_widgets.h>
#include <dlib/image_io.h>
#include <iostream>

using namespace dlib;
using namespace std;

// ----------------------------------------------------------------------------------------

int main(int argc, char** argv)
{
    try
    {
        // We need a face detector. We will use this to get bounding boxes for
        // each face in an image.
        frontal_face_detector detector = get_frontal_face_detector();
        // And we also need a shape_predictor. This is the tool that will predict face
        // landmark positions given an image and face bounding box. Here the model is
        // loaded from shape_predictor_68_face_landmarks.dat in the working directory.
        shape_predictor sp;
        deserialize("shape_predictor_68_face_landmarks.dat") >> sp;

        image_window win, win_faces;
        // The loop over command-line images is commented out; a single image
        // (got.jpg) is processed instead.
        //for (int i = 2; i < argc; ++i)
        //{
        //cout << "processing image " << argv[i] << endl;
        array2d<rgb_pixel> img;
        string img_path = "got.jpg";
        //load_image(img, argv[i]);
        load_image(img, img_path);
        // Make the image larger so we can detect small faces.
        pyramid_up(img);

        // Now tell the face detector to give us a list of bounding boxes
        // around all the faces in the image.
        std::vector<rectangle> dets = detector(img);
        cout << "Number of faces detected: " << dets.size() << endl;

        // Now we will go ask the shape_predictor to tell us the pose of
        // each face we detected.
        std::vector<full_object_detection> shapes;
        for (unsigned long j = 0; j < dets.size(); ++j)
        {
            full_object_detection shape = sp(img, dets[j]);
            cout << "number of parts: " << shape.num_parts() << endl;
            cout << "pixel position of first part: " << shape.part(0) << endl;
            cout << "pixel position of second part: " << shape.part(1) << endl;
            // You get the idea, you can get all the face part locations if
            // you want them. Here we just store them in shapes so we can
            // put them on the screen.
            shapes.push_back(shape);
        }

        // Now let's view our face poses on the screen.
        win.clear_overlay();
        win.set_image(img);
        win.add_overlay(render_face_detections(shapes));

        // We can also extract copies of each face that are cropped, rotated upright,
        // and scaled to a standard size as shown here:
        dlib::array<array2d<rgb_pixel> > face_chips;
        extract_image_chips(img, get_face_chip_details(shapes), face_chips);
        win_faces.set_image(tile_images(face_chips));

        cout << "Hit enter to process the next image..." << endl;
        cin.get();
        //}
    }
    catch (exception& e)
    {
        cout << "\nexception thrown!" << endl;
        cout << e.what() << endl;
    }
}
```

If linking fails with an unresolved USER_ERROR__missing_dlib_all_source_cpp_file__OR__inconsistent_use_of_DEBUG_or_ENABLE_ASSERTS_preprocessor_directives symbol, the consistency check in the dlib headers can be commented out as below. Building the test project and dlib with the same Debug/Release configuration avoids the error without editing dlib.

```cpp
//#ifdef ENABLE_ASSERTS
//    extern int USER_ERROR__missing_dlib_all_source_cpp_file__OR__inconsistent_use_of_DEBUG_or_ENABLE_ASSERTS_preprocessor_directives;
//    inline int dlib_check_consistent_assert_usage() { USER_ERROR__missing_dlib_all_source_cpp_file__OR__inconsistent_use_of_DEBUG_or_ENABLE_ASSERTS_preprocessor_directives = 0; return 0; }
//#else
//    extern int USER_ERROR__missing_dlib_all_source_cpp_file__OR__inconsistent_use_of_DEBUG_or_ENABLE_ASSERTS_preprocessor_directives_;
//    inline int dlib_check_consistent_assert_usage() { USER_ERROR__missing_dlib_all_source_cpp_file__OR__inconsistent_use_of_DEBUG_or_ENABLE_ASSERTS_preprocessor_directives_ = 0; return 0; }
//#endif
//    const int dlib_check_assert_helper_variable = dlib_check_consistent_assert_usage();
```

Step5: Get results

Description:

https://leetcode.com/problems/move-zeroes/#/description

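Algorithm:

Two pointers: numNonZero marks the slot where the next non-zero element belongs. Each non-zero element is swapped into that slot, so the non-zero elements keep their relative order and the zeros collect at the end.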

Code:

```cpp
#include <utility>
#include <vector>
using namespace std;

class Solution {
public:
    void moveZeroes(vector<int>& nums) {
        int numNonZero = 0;  // next slot for a non-zero element
        for (size_t i = 0; i < nums.size(); i++)
            if (nums[i] != 0) swap(nums[numNonZero++], nums[i]);
    }
};
```

Time & Space:

O(n) & O(1)
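For comparison, the standard library can do the same reordering with `std::stable_partition`, which moves the elements satisfying a predicate to the front while preserving their relative order. This is a swapped-in technique, and the name `moveZeroesStl` is hypothetical. Note that `std::stable_partition` may allocate a temporary buffer (and falls back to O(n log n) swaps without one), so unlike the two-pointer loop it is not guaranteed to use O(1) extra space.

```cpp
#include <algorithm>
#include <vector>

// Non-zero elements go to the front in their original order; zeros end up last.
void moveZeroesStl(std::vector<int>& nums) {
    std::stable_partition(nums.begin(), nums.end(), [](int x) { return x != 0; });
}
```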

Description:

https://leetcode.com/problems/missing-number/#/description

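Algorithm:

Code1: the numbers 0..n have a known total, so subtracting the sum of the array leaves the missing number. Code2: XOR n together with every index 0..n-1 and every value; each number that is present appears twice and cancels out, leaving only the missing one.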

Code1:

```cpp
#include <vector>
using namespace std;

class Solution1 {
public:
    int missingNumber(vector<int>& nums) {
        int n = static_cast<int>(nums.size());
        int sumAll = 0;  // 0 + 1 + ... + n
        int sum = 0;     // sum of the given numbers
        for (int i = 0; i < n + 1; i++) sumAll += i;
        for (int i = 0; i < n; i++) sum += nums[i];
        return sumAll - sum;
    }
};
```

Code2:

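```cpp
#include <vector>
using namespace std;

class Solution {
public:
    int missingNumber(vector<int>& nums) {
        int n = static_cast<int>(nums.size());
        int res = n;  // start with n, the one value with no matching index
        for (int i = 0; i < n; i++) {
            res ^= nums[i];  // xor with each value
            res ^= i;        // and each index; matching pairs cancel out
        }
        return res;
    }
};
```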

Time & Space:

O(n) & O(1)
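The loop that builds sumAll in Code1 can be replaced by the closed form 0 + 1 + ... + n = n(n + 1) / 2. A minimal sketch, with the hypothetical name `missingNumberGauss`; the sums are kept in `long long` so the intermediate product cannot overflow for large n.

```cpp
#include <vector>

int missingNumberGauss(const std::vector<int>& nums) {
    long long n = static_cast<long long>(nums.size());
    long long expected = n * (n + 1) / 2;  // sum of 0..n
    long long actual = 0;                  // sum of the given numbers
    for (int x : nums) actual += x;
    return static_cast<int>(expected - actual);
}
```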